Alibaba Cloud's Qwen LLM 2.5 challenges OpenAI GPT-4o

In the rapidly evolving landscape of artificial intelligence, Alibaba Cloud has announced the release of its Qwen 2.5 models. This latest iteration of the Qwen series promises enhanced performance, efficiency, and capabilities in natural language processing (NLP) tasks. This article gives an overview of the Qwen 2.5 models' features and compares them with other notable AI models, including DeepSeek, OpenAI’s GPT-4o, Moonshot’s Kimi 1.5, Mixtral, and Claude’s latest version.

Alibaba Cloud Qwen 2.5 Models

The Qwen 2.5 models are designed to provide a robust and scalable solution for NLP tasks such as text classification, sentiment analysis, and language translation. These models are built on top of Alibaba Cloud’s proprietary AI framework, which enables efficient processing and deployment of large-scale AI workloads. The current release includes 100 open-source Qwen 2.5 multimodal models, which can perform a number of text and image analysis tasks: they can parse files, count objects in images, understand videos, and control PC operations.

These updates were revealed at the Apsara Conference, Alibaba Cloud’s annual flagship event. The event also unveiled a revamped full-stack infrastructure designed to meet the growing demand for AI computing, including a new data center architecture and new cloud products and services that enhance computing, networking, and more.

The newly released open-source Qwen 2.5 models range from 0.5 to 72 billion parameters in size, offer improved capabilities in math and coding, and support over 29 languages. The company reported that Qwen models have surpassed 40 million downloads. The open-source Qwen 2.5 range includes base models, instruct models, and quantized models, and covers language, audio, vision, code, and mathematical models.
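To give a sense of what the 0.5 to 72 billion parameter range means in practice, the sketch below estimates the memory needed just to hold a model's weights at different precisions. It is a back-of-the-envelope calculation, not something published by Alibaba Cloud: the function name `weight_memory_gb` and the precision table are illustrative assumptions, and the estimate ignores activations, the KV cache, and runtime overhead. The int8 and int4 rows stand in for quantized variants in general, not for any specific quantized Qwen 2.5 release.

```python
# Rough weight-memory estimate: parameter count x bytes per parameter.
# Weights only; activations, KV cache, and runtime overhead are excluded.

# Assumed storage cost per parameter for common precisions (illustrative).
BYTES_PER_PARAM = {
    "fp16": 2.0,  # 16-bit floating point
    "int8": 1.0,  # 8-bit quantization
    "int4": 0.5,  # 4-bit quantization
}


def weight_memory_gb(params_billions: float, precision: str) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * BYTES_PER_PARAM[precision]
    return total_bytes / 1e9


if __name__ == "__main__":
    # Smallest and largest parameter counts named in the text.
    for size in (0.5, 72.0):
        for precision in BYTES_PER_PARAM:
            gb = weight_memory_gb(size, precision)
            print(f"{size:>5}B params @ {precision}: ~{gb:.2f} GB")
```

Under these assumptions, the gap between the smallest and largest models is roughly two orders of magnitude in weight storage, which is why the quantized variants matter most at the upper end of the range.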

Alibaba Cloud Qwen 2.5 Features

- Improved accuracy: The Qwen 2.5 models have demonstrated significant improvements in accuracy across various NLP tasks, thanks to the incorporation of advanced techniques such as attention mechanisms and transformer architectures.

- Enhanced efficiency: The models are optimized for efficient processing, allowing for faster inference times and reduced computational resources.

- Increased scalability: The Qwen 2.5 models can be easily scaled up or down to accommodate varying workloads, making them suitable for a wide range of applications.

- Support for multiple languages: The models support multiple languages, including English, Chinese, Spanish, French, and many others.

At the Apsara Conference, Alibaba Cloud also announced updates to its image generator, the Tongyi Wanxiang large model family. It features an advanced diffusion transformer (DiT) architecture and is capable of generating high-quality videos with realistic scenes or 3D animation in a variety of visual styles. The model can generate videos from Chinese or English text prompts and can transform static images into dynamic videos with enhanced video reconstruction quality.

Qwen 2.5 Comparison with Other AI Models

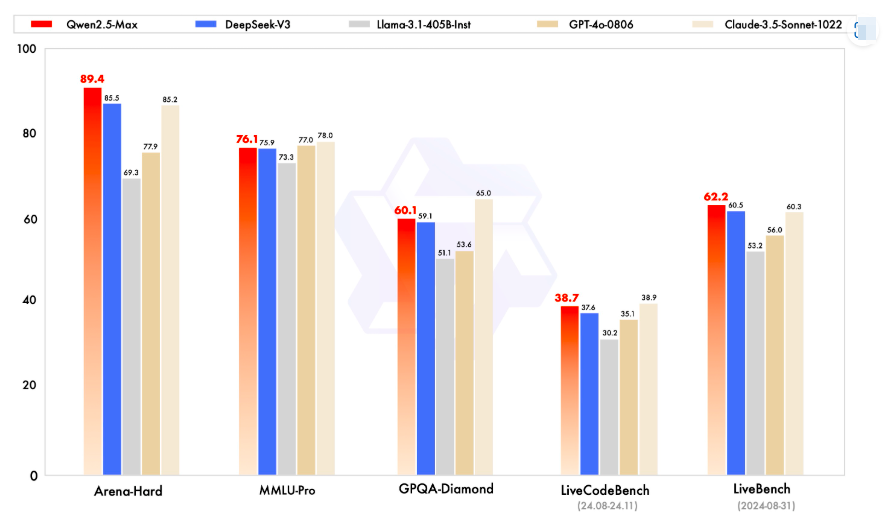

Qwen 2.5 Max compared with other notable AI models in the industry (per Alibaba Cloud's benchmark results)

DeepSeek

DeepSeek is a popular AI model from the DeepSeek team. While DeepSeek has demonstrated impressive performance in various tasks, it lags behind the Qwen 2.5 models in terms of efficiency and scalability.

- Accuracy: DeepSeek has achieved state-of-the-art results in certain NLP tasks, but the Qwen 2.5 models have demonstrated comparable or superior performance in many cases.

- Efficiency: The Qwen 2.5 models are optimized for efficient processing, making them more suitable for large-scale deployments.

- Scalability: The Qwen 2.5 models can be easily scaled up or down, whereas DeepSeek requires more significant computational configurations.

OpenAI’s GPT-4o

OpenAI’s GPT-4o is a highly advanced language model that has garnered significant attention in the AI community. While GPT-4o has demonstrated impressive capabilities in language understanding and generation, it falls short of the Qwen 2.5 models in the areas below.

- Accuracy: GPT-4o has achieved state-of-the-art results in certain NLP tasks, but the Qwen 2.5 models have demonstrated comparable or superior performance in many cases.

- Efficiency: The Qwen 2.5 models are optimized for efficient processing, making them more suitable for large-scale deployments.

- Scalability: The Qwen 2.5 models can be easily scaled up or down, whereas GPT-4o requires more significant computational resources.

Moonshot’s Kimi 1.5 LLM AI

Moonshot’s Kimi 1.5 is a relatively new AI model that has shown promising results in NLP tasks. While Kimi 1.5 has demonstrated impressive performance, it lags behind the Qwen 2.5 models in terms of efficiency and scalability.

- Accuracy: Kimi 1.5 has achieved competitive results in certain NLP tasks, but the Qwen 2.5 models have demonstrated comparable or superior performance in many cases.

- Efficiency: The Qwen 2.5 models are optimized for efficient processing, making them more suitable for large-scale deployments.

- Scalability: The Qwen 2.5 models can be easily scaled up or down, whereas Kimi 1.5 requires more significant computational resources.

Mixtral AI

Mixtral is a popular AI model that has shown promising results in NLP tasks. While Mixtral has demonstrated impressive performance, it lags behind the Qwen 2.5 models in the areas below.

* Accuracy: Mixtral has achieved competitive results in certain NLP tasks, but the Qwen 2.5 models have demonstrated comparable or superior performance in many cases.

* Efficiency: The Qwen 2.5 models are optimized for efficient processing, making them more suitable for large-scale deployments.

* Scalability: The Qwen 2.5 models can be easily scaled up or down, whereas Mixtral requires more effort to scale.

Claude AI

- Accuracy: Both Claude and the Qwen 2.5 models have demonstrated impressive accuracy in NLP tasks, but the Qwen 2.5 models have shown slightly better performance in certain tasks, such as text classification and sentiment analysis.

- Efficiency: The Qwen 2.5 models have demonstrated slightly faster inference times and lower computational resource usage.

- Scalability: The Qwen 2.5 models can be easily scaled up or down to accommodate varying workloads, making them more suitable for large-scale deployments.

- Language support: Both models support multiple languages, but the Qwen 2.5 models have demonstrated better performance in certain languages, such as Chinese and Spanish.